Real-Time Sound-to-Haptic Conversion

I focus on enhancing immersion in games by automatically generating haptic effects from sound signals. The research is divided into three stages. The first stage uses learning models to detect sounds suitable for haptic effects in a gameplay sound stream. The second stage generates corresponding haptic signals of various modalities, either designed manually or derived automatically from the sound signals. The third stage renders the haptic signals through appropriate haptic displays.
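As a rough illustration of how the three stages fit together, here is a minimal sketch. It is not the method used in my studies: the detection stage below is a simple energy threshold standing in for a learning model, the haptic signal is a generic decaying sinusoid, and every function name and parameter value is a hypothetical choice made only for this example.

```python
import math


def frame_energies(samples, frame_size):
    """Mean squared amplitude of each non-overlapping frame."""
    return [
        sum(x * x for x in samples[i:i + frame_size]) / frame_size
        for i in range(0, len(samples) - frame_size + 1, frame_size)
    ]


def detect_events(samples, frame_size=256, threshold=0.1):
    """Stage 1 (stand-in): indices of frames where the energy rises above
    the threshold. A learning model would replace this rule."""
    events = []
    prev_above = False
    for idx, energy in enumerate(frame_energies(samples, frame_size)):
        above = energy >= threshold
        if above and not prev_above:  # rising edge only, one event per burst
            events.append(idx)
        prev_above = above
    return events


def generate_vibration(duration_s=0.05, freq_hz=150.0, rate_hz=1000, decay=40.0):
    """Stage 2 (assumed waveform): exponentially decaying sinusoid."""
    n = round(duration_s * rate_hz)
    return [
        math.exp(-decay * t / rate_hz) * math.sin(2 * math.pi * freq_hz * t / rate_hz)
        for t in range(n)
    ]


def schedule_rendering(events, frame_size, sample_rate):
    """Stage 3 (stand-in): pair each event onset time in seconds with the
    waveform that a haptic display would play."""
    return [(idx * frame_size / sample_rate, generate_vibration()) for idx in events]
```

Calling `detect_events` on a buffer of audio samples and passing the result to `schedule_rendering` yields a list of (onset time, waveform) pairs. In a real-time setting the same steps would run on short incoming buffers rather than on a whole recording.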

I have conducted three studies during my time as a graduate student.

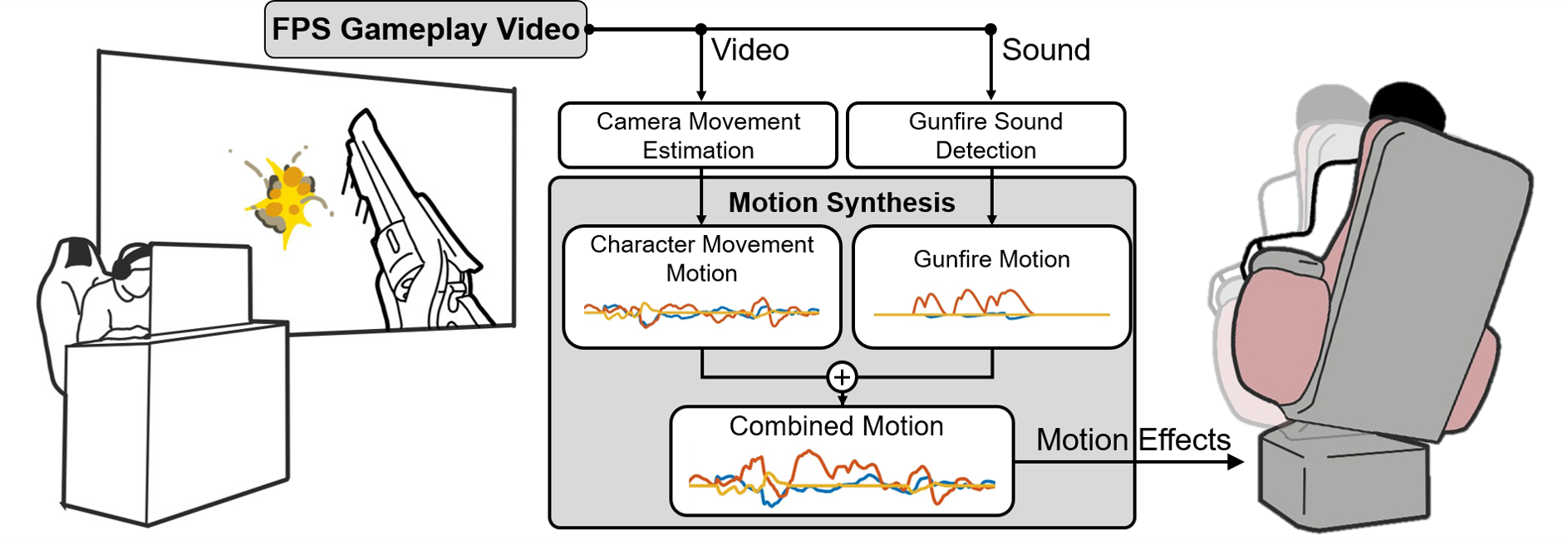

- Sound-to-Motion Conversion for Game Viewing

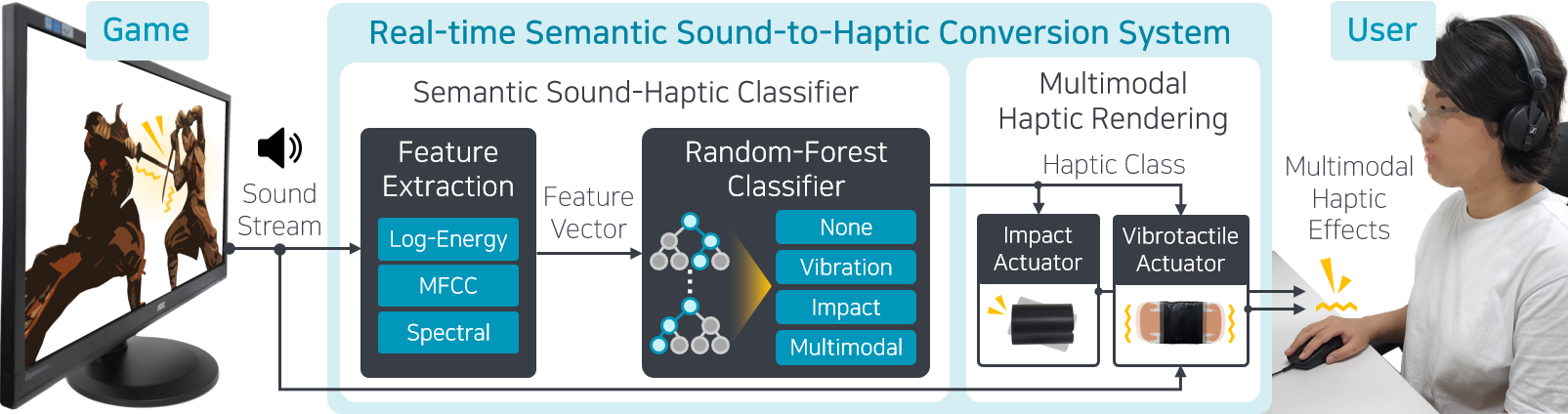

Detecting gunfire sounds in FPS gameplay videos and generating corresponding recoil motion effects to complement the audio cues.

- Sound-to-Tactile Conversion for Game Playing

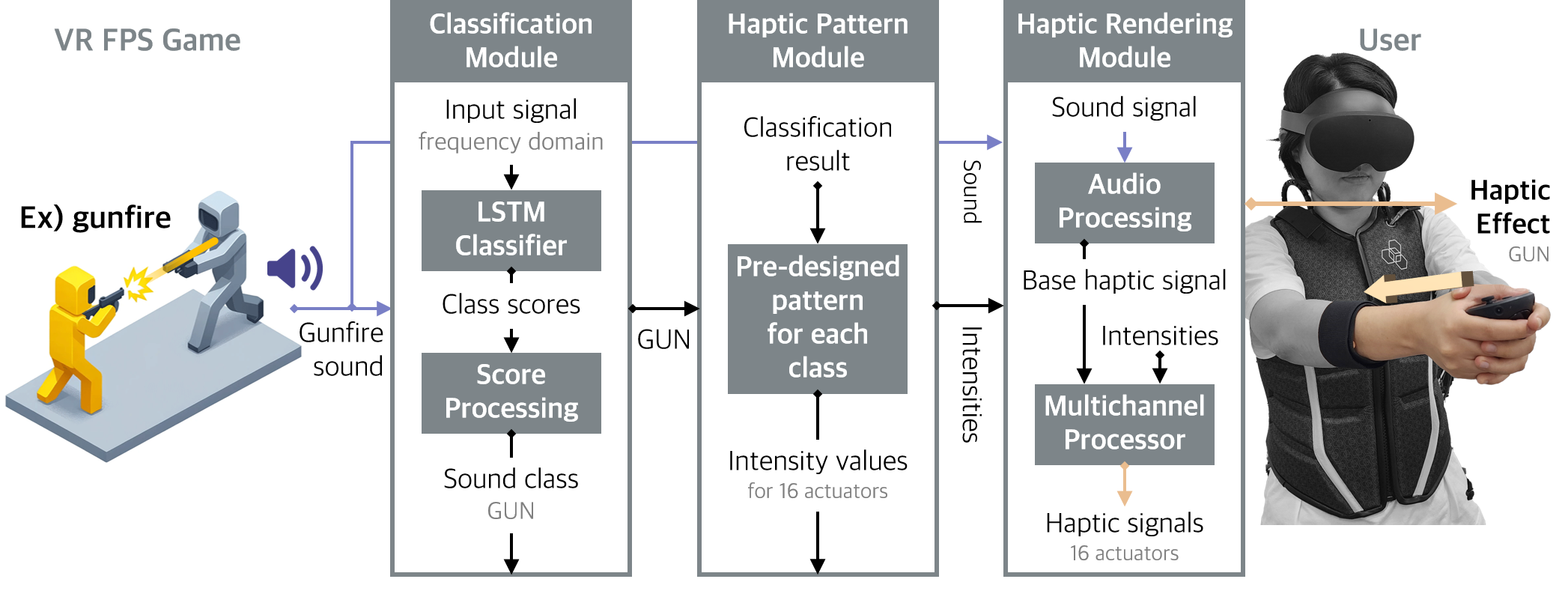

Providing multimodal (impact and vibration) haptic effects by detecting appropriate moments for haptic effects in real time from sound signals.

- Semantic Haptic Rendering for Game Playing (To be submitted)

Employing a deep learning model to classify the semantic classes of sound events and providing users with tailored full-body vibration patterns through a haptic suit.

For more details, please see the papers.